From a YouTube tutorial showing how ChatGPT can "write a systematic review [in] under 1 hour" to reports of the program fabricating citations, recent media coverage of ChatGPT shows both its promise and its peril for library research and writing tasks. We understand the appeal of automating and streamlining these tasks: finding relevant articles, synthesizing knowledge, and putting it all into writing is difficult and time-consuming. So when can ChatGPT help, and when might it lead you astray?

First, some background:

ChatGPT, a chatbot from the company OpenAI, is one example of generative artificial intelligence (AI) based on large language models (LLMs). Google's Bard is a similar program. These generative text tools are predictive language models trained on source material drawn from openly available content on the internet. They are not discovery tools or search engines. When a user types a prompt or question, the tool generates a response based on statistical patterns learned from that source material, predicting which words are likely to come next. In effect, it paraphrases its source material, producing words in an order that is statistically plausible.

Strengths of ChatGPT and other generative AI for writing and library research:

The interactive and conversational structure of ChatGPT can help to stimulate thinking about a topic or to inform prewriting activities. It could be used to generate initial content that you can analyze, adapt, and revise based on your own knowledge and reading. In this way, it can help you to refine a writing or research idea in its early stages or to overcome writer's block or the fear of the blank page.

Limitations of ChatGPT and other generative AI for writing and library research:

The source material used to train ChatGPT and similar tools has limitations. It is not current, so some information may be outdated or incorrect. The free version of ChatGPT (version 3.5) is based on source material that ends in September 2021, so it cannot incorporate events or information from after that date. The source material can also contain inaccuracies, biased information, and misinformation. It does not include scholarly sources behind subscription paywalls, such as many academic journal articles and books. Finally, ChatGPT and similar tools do not analyze, validate, or assess their source material for accuracy.

ChatGPT is also known to fabricate references. This is a natural consequence of its being a predictive text model rather than a search engine: the citations it provides are frequently not real articles. Libraries are receiving requests for articles that sound like real references but were generated by ChatGPT and do not exist. Users should verify any citation provided by ChatGPT in a library database or e-journal.

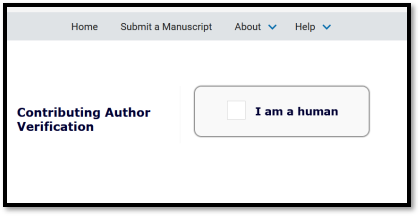

In addition to these technical limitations, it is essential to note that using content from ChatGPT without attribution generally constitutes cheating or a research integrity violation. Academic assignments and published manuscripts require the author to be fully responsible for the content. ChatGPT cannot take responsibility and therefore cannot be an author. Most academic programs require that any use of ChatGPT for an academic assignment be cited explicitly and transparently.

This parallels the requirements and best practices of many major academic journals. Some journals, such as Nature and JAMA, prohibit using AI tools to author scientific articles but allow some use of these tools in prewriting activities if that use is properly credited. Other journals do not allow any use of generative AI.

Recommendations

Overall, ChatGPT and other generative AI can be helpful in prewriting or early research tasks, such as refining a topic or brainstorming an organizational outline for a paper. However, these tools cannot do the intellectual work of research and writing for you, particularly when the goal of an academic or research manuscript is to describe your own activities and/or thinking.

If you use ChatGPT or another tool, be sure to describe how you used it in a citation or note. For example: “This assignment used ChatGPT/AI tools in brainstorming and conception of my work.” Authors who use AI at any point in the research and manuscript-writing process should closely review journal policies and instructions for authors and be prepared to disclose all AI contributions.

Double-check any citations that ChatGPT suggests by searching for the article and journal titles in a library database or e-journal site.

We encourage you to explore ChatGPT and to read more about its use. Here is some recommended reading to get you started:

Real-world experiments in research and writing with ChatGPT:

- On summarizing articles, books, etc.: "This ChatGPT feature has huge potential—but really needs work" by Doug Aamoth and posted on Fast Company. 2/22/23.

- On doing research: "Guest Post — Artificial Intelligence Not Yet Intelligent Enough to be a Trusted Research Aid" by Richard De Grijs and posted on the Scholarly Kitchen. 4/27/23.

Use of ChatGPT for articles in scholarly journals

- Tools such as ChatGPT threaten transparent science; here are our ground rules for their use. Nature. 2023 Jan;613(7945):612. doi: 10.1038/d41586-023-00191-1.

- Flanagin A, Bibbins-Domingo K, Berkwits M, Christiansen SL. Nonhuman "Authors" and Implications for the Integrity of Scientific Publication and Medical Knowledge. JAMA. 2023 Feb 28;329(8):637-639. doi: 10.1001/jama.2023.1344.

- Thorp HH. ChatGPT is fun, but not an author. Science. 2023 Jan 27;379(6630):313. doi: 10.1126/science.adg7879.

Duke guidance

- AI and Teaching at Duke, from Duke's Learning Innovations